Last week, Intel gave two talks about the Larrabee ISA, called Larrabee New Instructions (LRBni).

The most significant thing to note is that Larrabee will expose a vector assembly, very similar to the SSE instructions, but operating on 16-component vectors instead of 4. To program this, they will provide C intrinsics whose names look… really weird!
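To give an idea of what programming against a fixed 16-wide vector model implies, here is a minimal sketch in plain C++. `Vec16`, `madd16` and `saxpy_vec16` are hypothetical names made up for this illustration; they are not LRBni intrinsics and do not come from Intel's prototype headers. The point is only that the programmer has to chunk the data by the vector width and handle the leftover elements by hand.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 16-lane vector type for illustration; NOT an LRBni type.
constexpr std::size_t kLanes = 16;
using Vec16 = std::array<float, kLanes>;

// Lane-wise multiply-add: r[i] = a[i] * b[i] + c[i].
Vec16 madd16(const Vec16& a, const Vec16& b, const Vec16& c) {
    Vec16 r{};
    for (std::size_t i = 0; i < kLanes; ++i) {
        r[i] = a[i] * b[i] + c[i];
    }
    return r;
}

// y = alpha * x + y, written the "vector" way: the vector width (16)
// is baked into the code, and the tail needs a separate scalar loop.
void saxpy_vec16(float alpha, const std::vector<float>& x, std::vector<float>& y) {
    assert(x.size() == y.size());
    Vec16 valpha;
    valpha.fill(alpha);

    std::size_t i = 0;
    for (; i + kLanes <= x.size(); i += kLanes) {
        Vec16 vx{}, vy{};
        for (std::size_t l = 0; l < kLanes; ++l) {  // "vector load"
            vx[l] = x[i + l];
            vy[l] = y[i + l];
        }
        const Vec16 r = madd16(valpha, vx, vy);
        for (std::size_t l = 0; l < kLanes; ++l) {  // "vector store"
            y[i + l] = r[l];
        }
    }
    for (; i < x.size(); ++i) {  // scalar tail for the last size % 16 elements
        y[i] = alpha * x[i] + y[i];
    }
}
```

Calling `saxpy_vec16(2.0f, x, y)` on two arrays of 20 floats processes one full 16-wide chunk and then 4 elements in the scalar tail. Change the hardware vector width and this code has to be rewritten.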

C++ Larrabee prototype library: http://software.intel.com/en-us/articles/prototype-primitives-guide/

Intel provides headers with x86 implementations of these instructions so that developers can start using them now. But I can’t imagine anybody using this kind of vector intrinsics to program a data-parallel architecture. As we have seen with SSE, very few programmers ended up using the instructions, and only for very specific parts of their algorithms. So I think these intrinsics will only be used to implement higher-level programming layers, like an OpenCL implementation, which is for me a much better and more flexible way to program these architectures.

The scalar model exposed for the G80 through CUDA and the PTX assembly (and that will be exposed by OpenCL) uses scalar operations over scalar registers. In this model, the underlying SIMD architecture is visible through the notion of warps, inside which programmers know that divergent branches are serialized. Inter-thread communication is exposed through the notion of CTA (Cooperative Thread Array), a group of threads able to communicate through a very fast shared memory. Coalescing rules are given to programmers to let them make the best use of the underlying SIMD architecture, but the model is far more scalable (not restricted to a given vector size) and allows code to be written in a much more natural way than a vector model.
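The serialization of divergent branches inside a warp can be illustrated with a small CPU-side simulation. This is only a sketch in plain C++, not CUDA and not a description of the actual hardware scheduler: `run_warp`, `WarpResult` and the toy per-thread kernel (triple odd inputs, halve even ones) are assumptions made up for the example. Each thread is written as scalar code, and the warp executes every branch side that at least one thread takes, with the other threads masked off.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

constexpr std::size_t kWarpSize = 32;  // warp width on the G80

struct WarpResult {
    std::vector<int> out;  // one result per thread of the warp
    int passes;            // number of branch sides the warp had to execute
};

// Simulates one warp running the scalar per-thread kernel
//   out = (in is odd) ? in * 3 : in / 2;
// A branch side is executed once for the whole warp if any thread takes it.
WarpResult run_warp(const std::vector<int>& in) {
    assert(in.size() == kWarpSize);

    // Evaluate the branch condition for every thread to build the mask.
    std::array<bool, kWarpSize> odd{};
    bool any_odd = false;
    bool any_even = false;
    for (std::size_t t = 0; t < kWarpSize; ++t) {
        odd[t] = (in[t] % 2) != 0;
        any_odd = any_odd || odd[t];
        any_even = any_even || !odd[t];
    }

    WarpResult r{std::vector<int>(kWarpSize, 0), 0};
    if (any_odd) {  // first pass: only threads with the mask set are active
        ++r.passes;
        for (std::size_t t = 0; t < kWarpSize; ++t) {
            if (odd[t]) r.out[t] = in[t] * 3;
        }
    }
    if (any_even) {  // second pass: the complementary set of threads
        ++r.passes;
        for (std::size_t t = 0; t < kWarpSize; ++t) {
            if (!odd[t]) r.out[t] = in[t] / 2;
        }
    }
    return r;
}
```

With a uniform input (all even values, for instance) the warp needs a single pass; as soon as the 32 inputs mix odd and even values, both sides are executed one after the other. The per-thread code itself never mentions the vector width, which is what makes the model scale.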

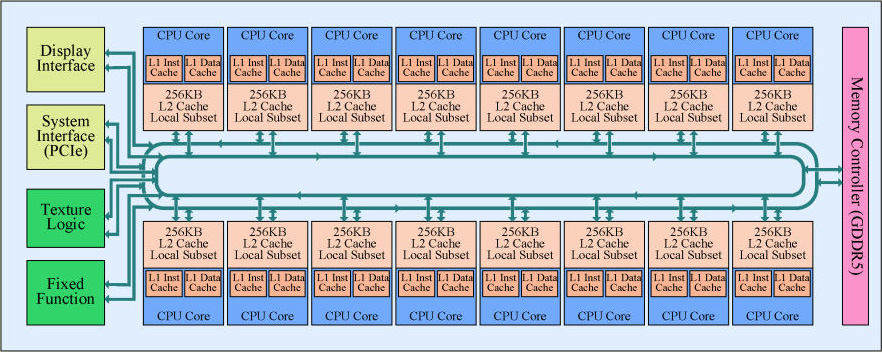

Even if, for now, Larrabee exposes a vector assembly where the G80 exposes a scalar one, only the programming model varies; the underlying architecture is in the end very similar. Each Larrabee core can dual-issue instructions to an x86 unit and 16 scalar processors working in SIMD, which is very similar to a G80 Multiprocessor, which can dual-issue instructions to a special unit or 8 scalar processors working in SIMD over 4 cycles (providing a 32-wide SIMD). Larrabee exposes 16-wide vector registers, where the G80 exposes scalar ones that are in fact aligned parts of a vector memory bank.

The true difference between the two architectures is that Larrabee will implement the whole graphics pipeline using these general-purpose cores (plus dedicated texture units), where the G80 still has a lot of very optimized units and data paths dedicated to graphics operations, connected into a fixed pipeline. The bet Intel is making is that the flexibility provided by the fully programmable pipeline will allow better load balancing, which will compensate for the lower efficiency of the architecture on graphics operations. The major asset they rely on is a binning rasterisation model where, after the transform stage, triangles are assigned to cores according to the screen tiles they cover, and each core does all the rasterisation, shading and blending for its tiles. Thanks to this model, they can keep local screen regions per core in dedicated parts of a global L2 cache, which is also used for inter-core communication. That should allow efficient programmable blending, for instance. But I think that even they don’t know if it will really be competitive for consumer graphics!
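The binning step itself is easy to sketch. The code below is an illustration in plain C++ under my own assumptions, not Intel's implementation: `Triangle`, `bin_triangles` and the tile size are hypothetical, and triangles are binned conservatively by their screen-space bounding box, so a tile may receive a triangle that does not actually cover any of its pixels. Each resulting bin could then be handed to a core that rasterises, shades and blends that tile locally.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Screen-space triangle (already transformed); hypothetical layout.
struct Triangle {
    float x[3];
    float y[3];
};

// Returns one bin per screen tile (row-major), each holding the indices of
// the triangles whose bounding box overlaps that tile.
std::vector<std::vector<int>> bin_triangles(const std::vector<Triangle>& tris,
                                            int width, int height, int tile) {
    assert(width > 0 && height > 0 && tile > 0);
    const int tiles_x = (width + tile - 1) / tile;
    const int tiles_y = (height + tile - 1) / tile;
    std::vector<std::vector<int>> bins(static_cast<std::size_t>(tiles_x) * tiles_y);

    for (std::size_t i = 0; i < tris.size(); ++i) {
        const Triangle& t = tris[i];
        const float minx = std::min({t.x[0], t.x[1], t.x[2]});
        const float maxx = std::max({t.x[0], t.x[1], t.x[2]});
        const float miny = std::min({t.y[0], t.y[1], t.y[2]});
        const float maxy = std::max({t.y[0], t.y[1], t.y[2]});

        // Skip triangles whose bounding box is entirely off screen.
        if (maxx < 0.0f || maxy < 0.0f || minx >= width || miny >= height) continue;

        // Clamp the bounding box to the screen, then convert to tile ranges.
        const int tx0 = static_cast<int>(std::max(minx, 0.0f)) / tile;
        const int ty0 = static_cast<int>(std::max(miny, 0.0f)) / tile;
        const int tx1 = static_cast<int>(std::min(maxx, width - 1.0f)) / tile;
        const int ty1 = static_cast<int>(std::min(maxy, height - 1.0f)) / tile;

        for (int ty = ty0; ty <= ty1; ++ty) {
            for (int tx = tx0; tx <= tx1; ++tx) {
                bins[static_cast<std::size_t>(ty) * tiles_x + tx].push_back(static_cast<int>(i));
            }
        }
    }
    return bins;
}
```

On a 128x128 screen with 64-pixel tiles, a small triangle in the top-left corner lands in a single bin, while a triangle straddling the screen centre is duplicated into all four. That duplication is the price paid for keeping each tile's framebuffer region local to one core.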

And even on that point, the Larrabee approach is not so different from the G80 approach, where triangles are globally rasterized and fragments are then spread among Multiprocessors based on screen tiles (cf. http://www.icare3d.org/GPU/CN08) for fragment shading. The difference is that z-test and blending are done by fixed-function ROP units, connected to the MPs via a crossbar (cf. http://www.realworldtech.com/page.cfm?ArticleID=RWT090808195242).

Finally, with these talks, Intel seems to present as a revolutionary new architecture something that, for the most part, has been here for more than 2 years now with the G80, and that comes with a programming model that seems really weird compared to the CUDA model. This is even weirder given that Larrabee may not be released before Q1 2010, by which time NVIDIA and ATI will already have released their next-generation architectures, which may look even more similar to Larrabee. With Larrabee, Intel has been feeding the industry a lot of promises, like “it will be x86, so you won’t have to do anything particular to use it”, which we have always known to be wrong, since by nature the efficiency of a data-parallel architecture comes from its particular programming model. If proof was needed, I think this ISA is it.

Intel GDC presentations: http://software.intel.com/en-us/articles/intel-at-gdc/

Larrabee at GDC, PC Watch review (translated from Japanese): http://translate.google.fr/translate?u=http%3A%2F%2Fpc.watch.impress.co.jp%2Fdocs%2F2009%2F0330%2Fkaigai498.htm&sl=ja&tl=en&hl=fr&ie=UTF-8

Very good article about Larrabee and LRBNi: http://www.ddj.com/architect/216402188
